Getting Started

Quickstart

Looking to start monitoring your prompts and gaining insights instantly? We've made it drop-dead simple: all you need to do is integrate the Libretto SDK!

If you'd like to focus on optimizing your prompts without integration, check out our quickstart prompt engineering guide.

Sign Up

If you haven't already, sign up for a Libretto account here, or ask your project owner to invite you.

Integrate the SDK

We currently offer TypeScript SDKs for OpenAI and Anthropic, and a Python SDK for OpenAI. All of these act as wrappers around the model provider's native client, meaning you won't have to change much from your existing code.

Our SDKs do not modify the prompt or proxy the call through Libretto. Instead, they send the LLM result to Libretto asynchronously, in the background, after the call is made.
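
To make that pattern concrete, here is a minimal sketch of a wrapper that calls the model directly and reports the result from a background thread. This is only an illustration of the idea, not Libretto's actual implementation: BackgroundReporter, call_model, and send_event are hypothetical names invented for this example.

```python
import queue
import threading
from typing import Any, Callable


class BackgroundReporter:
    """Hypothetical helper: reports results off the request path."""

    def __init__(self, send_event: Callable[[dict], None]) -> None:
        self._send_event = send_event
        self._events = queue.Queue()
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def _drain(self) -> None:
        # Runs on the worker thread: forward queued events one at a time.
        while True:
            event = self._events.get()
            if event is None:  # sentinel: stop the worker
                break
            try:
                self._send_event(event)
            except Exception:
                pass  # a reporting failure must never break the caller

    def wrap(self, call_model: Callable[..., Any]) -> Callable[..., Any]:
        def wrapped(*args: Any, **kwargs: Any) -> Any:
            # The provider is called directly: no proxy, no prompt changes.
            result = call_model(*args, **kwargs)
            self._events.put({"args": args, "kwargs": kwargs, "result": result})
            return result

        return wrapped

    def close(self) -> None:
        # Flush everything queued so far, then stop the worker.
        self._events.put(None)
        self._worker.join()


# Usage with a stand-in "model" and an in-memory event sink.
sent = []
reporter = BackgroundReporter(sent.append)
fake_model = reporter.wrap(lambda prompt: prompt.upper())
answer = fake_model("hi")
reporter.close()
```

Because the event is queued only after the provider returns, the caller gets its response without waiting on the reporting step.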

You can find general integration instructions here, and project-specific instructions in the Project Dashboard after logging in.

Choose an Environment and Grab Your Project's API Key

On the Project Dashboard (the default view after logging in), select View API Keys on the upper right.

We create four distinct environments for each project, each with its own API key. Select the environment that's right for you, then copy its key. You'll then need to set this key either in a .env file as LIBRETTO_API_KEY, or directly in the libretto parameter when instantiating your model provider client.
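
If you want to fail fast when the key is missing, a small helper like the one below can be handy. It is a sketch under a few assumptions: resolve_api_key is a hypothetical function (not part of the SDK), the precedence of an explicit key over the environment variable is our choice for the example, and the .env file is assumed to have already been loaded into the process environment by your framework or tooling.

```python
import os
from typing import Mapping, Optional


def resolve_api_key(explicit: Optional[str] = None,
                    env: Mapping[str, str] = os.environ) -> str:
    """Return the key passed in code, else LIBRETTO_API_KEY from the environment."""
    # Assumption: an explicitly passed key wins over the environment variable.
    key = explicit or env.get("LIBRETTO_API_KEY")
    if not key:
        raise RuntimeError(
            "No Libretto API key found: set LIBRETTO_API_KEY or pass one explicitly"
        )
    return key
```

Passing the environment as a parameter keeps the helper easy to test without touching real environment variables.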

Map to a Prompt Template (optional)

If you've already created a specific Prompt Template for your intended call, select the given Prompt Template within the UI. From there, copy the promptTemplateName at the top of the page and add it to the libretto parameter in your SDK call.

If you'd like to learn more, check out our guide for creating your first Prompt Template.

Start Sending Events!

You've integrated the SDK and you're ready to start monitoring your LLM calls. Simply invoke the LLM as you would normally - whether through standard production usage, manually, or a test script - and you'll see the calls automatically populate the Libretto dashboards.

Here's where things get fun.

Prompt Template Detection

If you haven't yet created a Prompt Template and added its promptTemplateName to your call as outlined above, we will automatically group your calls and create unique Libretto Prompt Templates. We will also detect and track any prompt changes within a given Prompt Template, and differentiate those versions as calls come in.
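
One simple way to picture version tracking is to fingerprint the template text, so that any change to the prompt produces a new version bucket. The sketch below is a toy illustration of that idea only; it is not how Libretto detects templates or versions, and template_fingerprint, group_by_version, and the "template" field are hypothetical.

```python
import hashlib
from collections import defaultdict


def template_fingerprint(template: str) -> str:
    """Hash the whitespace-normalized template text into a short version ID."""
    normalized = " ".join(template.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:12]


def group_by_version(calls: list[dict]) -> dict[str, list[dict]]:
    """Bucket calls by the fingerprint of the template they were made with."""
    versions: dict[str, list[dict]] = defaultdict(list)
    for call in calls:
        versions[template_fingerprint(call["template"])].append(call)
    return dict(versions)


# The first two calls differ only in whitespace, so they share a version.
calls = [
    {"template": "Summarize: {text}"},
    {"template": "Summarize:   {text}"},
    {"template": "Summarize briefly: {text}"},
]
groups = group_by_version(calls)
```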

Auto-generated Evals

After creating a Prompt Template, we will analyze the content and expected output of your prompts to determine a set of Evals. We use these to:

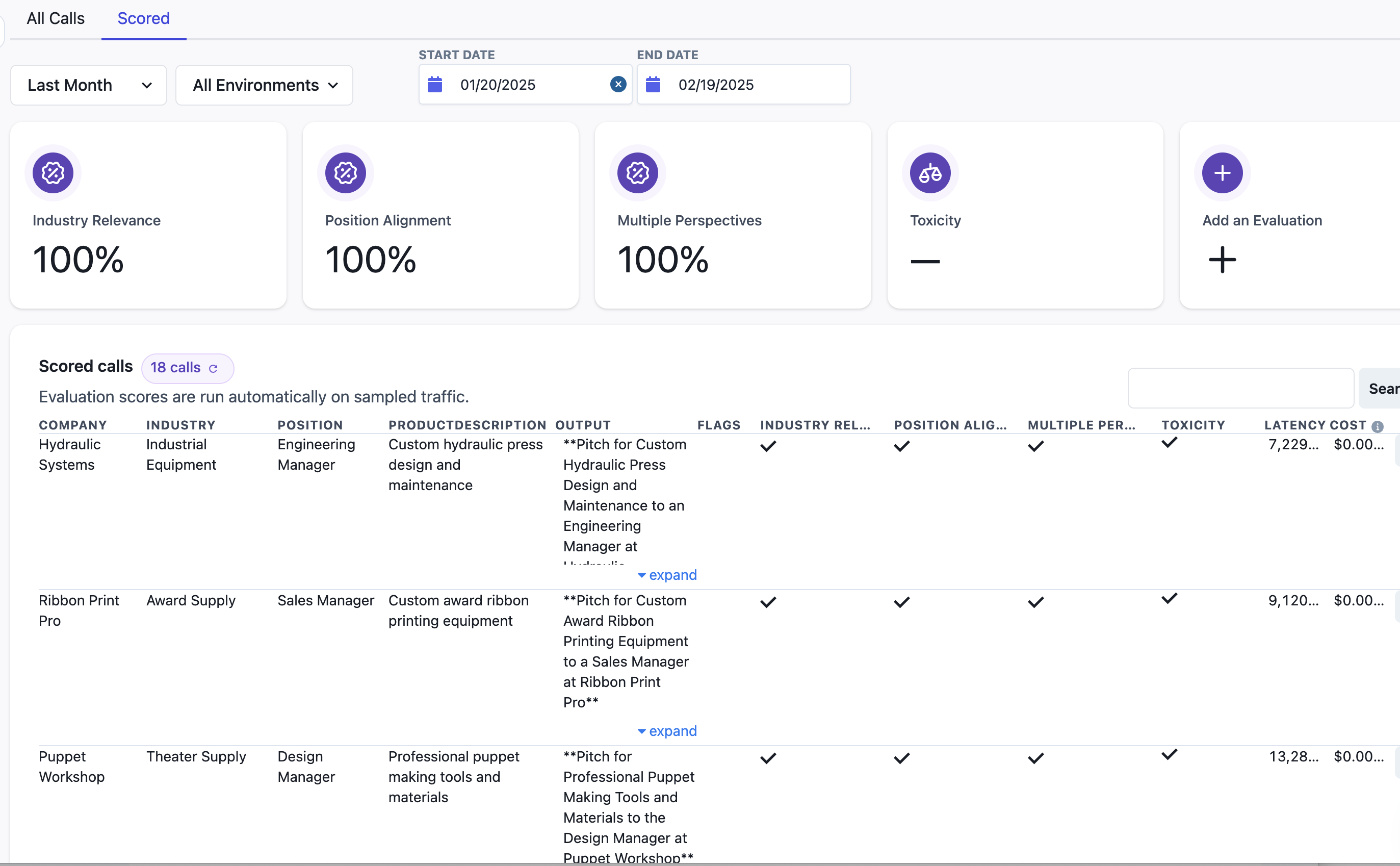

- grade a sample of your traffic

- test any variations of your prompt and chosen models in the future

These Evals can be manually adjusted or re-generated at any time within the application.

Auto-generated Test Cases

We've found that finding and building the right set of test cases and evals is the most difficult part of prompt engineering and deploying production-worthy AI applications. We're striving to make that an issue of the past.

Once you've reached a certain threshold of traffic - about 500 calls for a given Prompt Template - Libretto will sample those calls, and use them to create unique and meaningful test cases.

Pretty cool, right?

Chains

If you'd like to group a set of calls into what's commonly called a Chain, you can pass a UUIDv4 to the libretto config parameter. Chains are a useful tool for debugging and tracing issues within your application, particularly when the series of calls encapsulates a coherent piece of functionality or behavior.

You'll be able to see stats and a visual timeline of your chain and its respective calls in the Libretto UI.
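
Generating the identifier itself needs nothing beyond the standard library. In the sketch below, the UUIDv4 is created once and reused for every call in the chain. Note that the "chain_id" key and the step_configs structure are placeholders for illustration; check the integration instructions for the actual field name the libretto config parameter expects.

```python
import uuid


def new_chain_id() -> str:
    """Generate a random (version 4) UUID string to tag every call in one chain."""
    return str(uuid.uuid4())


# Create the ID once per chain, then reuse it for each step.
chain_id = new_chain_id()

# "chain_id" is a placeholder key name, not a confirmed SDK field.
step_configs = [
    {"chain_id": chain_id, "step": name} for name in ("retrieve", "summarize")
]
```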

Monitor the Project Dashboard

Now that we've sent some calls to Libretto, you can maintain an overview of all of your prompts within the Project Dashboard.

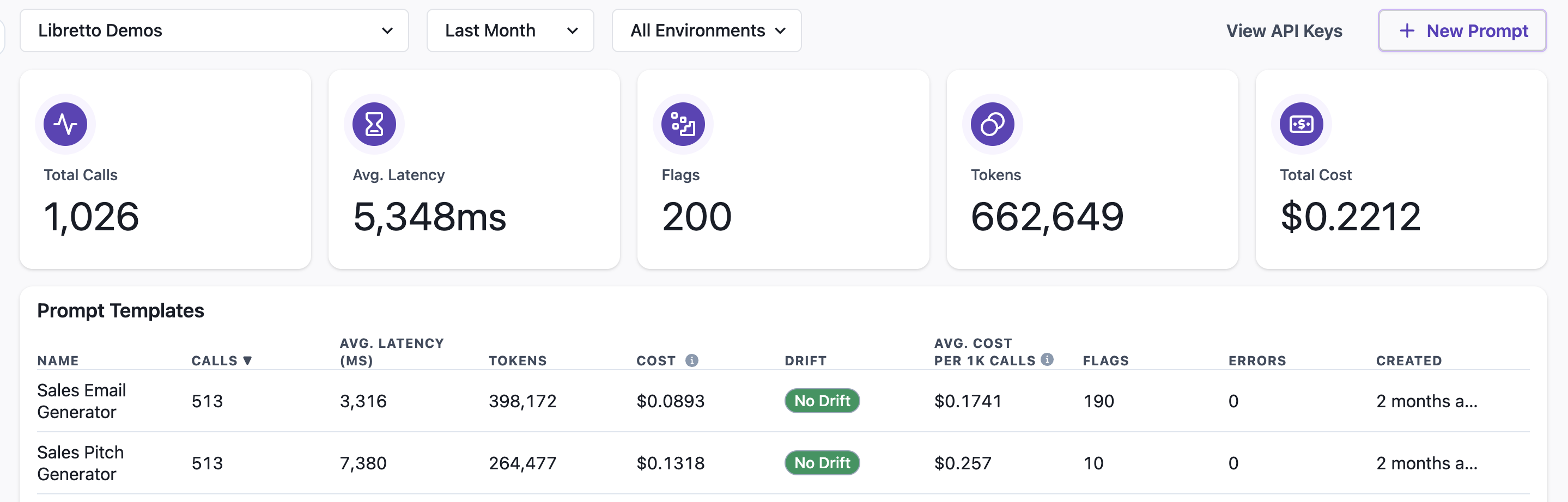

At a glance, you'll be able to track the number of calls, average latency, errors, and cost both across and within a given Prompt Template. You can change the timeframe and filter by environment as well.

Clicking on a Prompt Template will take you to the respective Prompt Template Dashboard.

Flags

As your calls come in, Libretto will perform certain classifications to determine if there were any problematic occurrences within either the user input or LLM response.

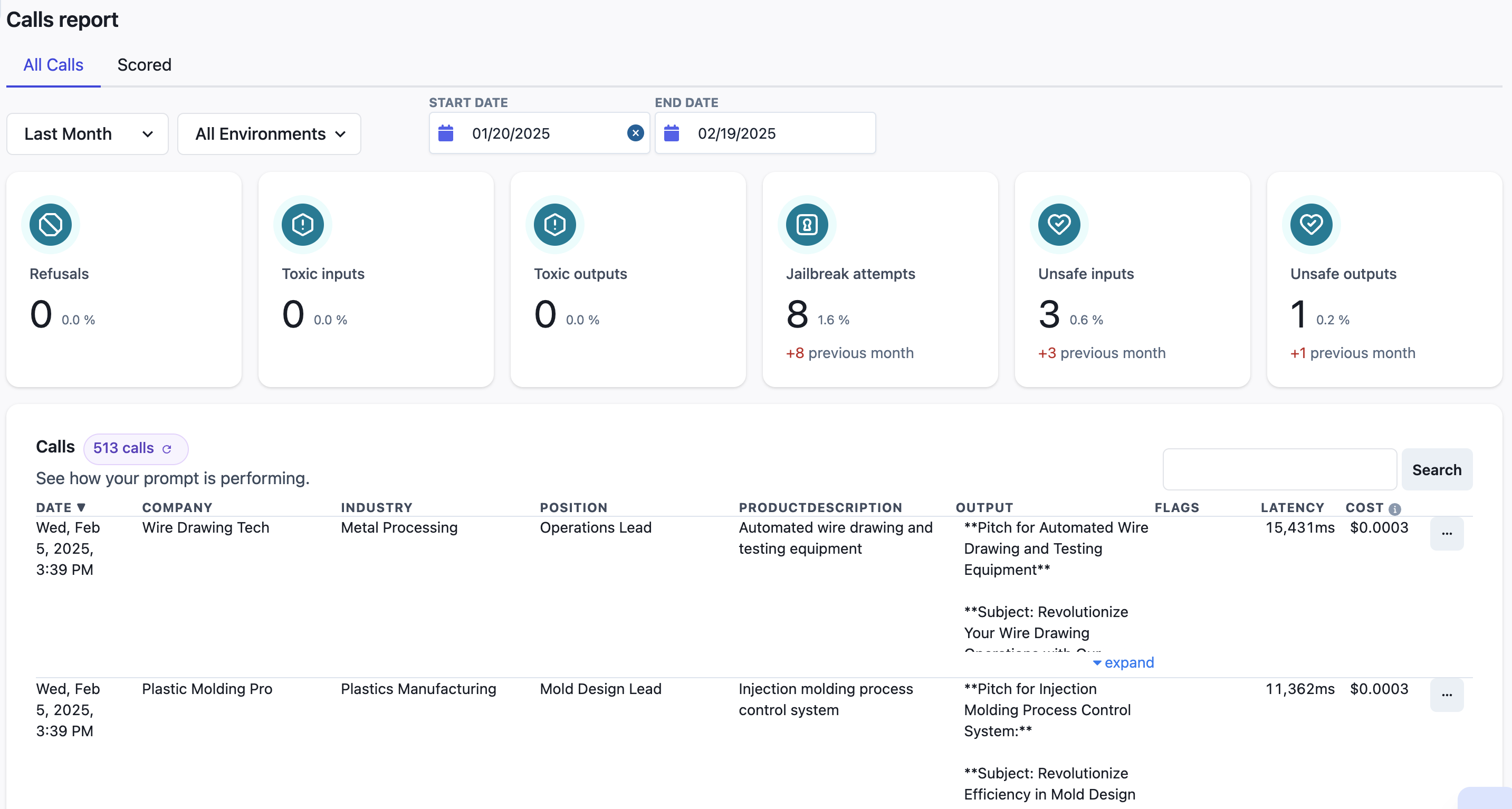

These include jailbreak attempts, refusals by the LLM, toxicity, and LlamaGuard safety. You can see an aggregate number of these flagged calls on this dashboard, while getting a more detailed breakdown on the Prompt Template Dashboard.

Drift

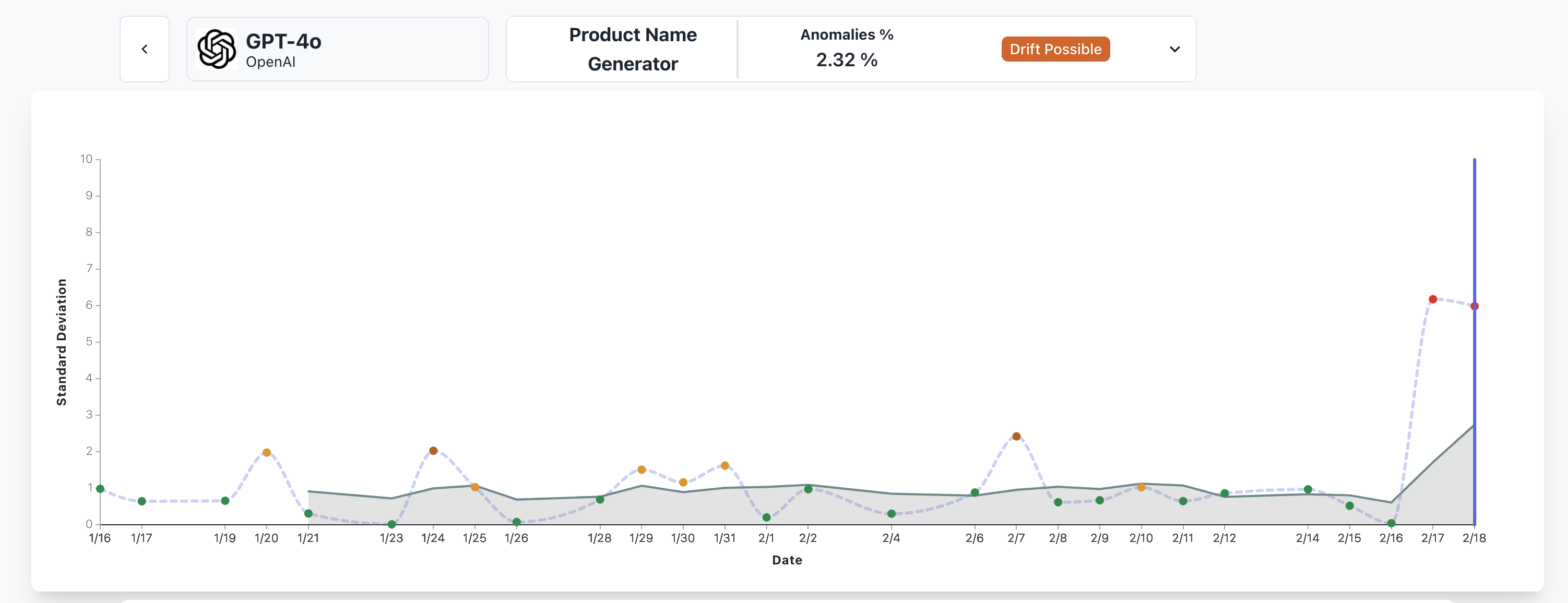

A real concern for any LLM application is Model Drift, where the responses of a given model provider change significantly without any clear indication of how or why. When you've reached the traffic threshold for auto-generating test cases outlined above, Libretto will initiate a separate Drift Dashboard for that Prompt Template.

We start by running all test cases a large number of times against the model you used, in order to determine a baseline response. From there, we'll run these test cases again every day, and measure any potential variance between the baseline response and the new one. We aggregate and chart those variations to determine if model drift is likely.

You can view our conclusion at a glance in the Project Dashboard, where each Prompt Template will be labeled as No Drift, Drift Possible, or Drift Likely. If you click on the label, you can reach the Drift Dashboard for a deeper dive into the results.
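
To give a feel for the baseline-versus-daily comparison, here is a rough sketch that scores how far today's responses have moved from a set of baseline responses. It is an assumption-heavy illustration, not Libretto's drift method: the text-similarity measure (difflib's SequenceMatcher), the function names, and both thresholds are made up for the example.

```python
from difflib import SequenceMatcher
from statistics import mean


def drift_score(baseline: dict[str, list[str]], today: dict[str, str]) -> float:
    """Mean dissimilarity (0 to 1) between each new response and its closest baseline response."""
    distances = []
    for case_id, response in today.items():
        best = max(
            SequenceMatcher(None, response, b).ratio() for b in baseline[case_id]
        )
        distances.append(1.0 - best)
    return mean(distances)


def drift_label(score: float, possible: float = 0.2, likely: float = 0.5) -> str:
    """Map a score to the three dashboard labels; both thresholds are invented values."""
    if score >= likely:
        return "Drift Likely"
    if score >= possible:
        return "Drift Possible"
    return "No Drift"


# Baseline: several runs of the same test case; today: one fresh run.
baseline = {
    "t1": ["Paris is the capital of France.", "The capital of France is Paris."]
}
unchanged = drift_score(baseline, {"t1": "Paris is the capital of France."})
changed = drift_score(baseline, {"t1": "xyz"})
```

Comparing against the closest baseline response, rather than a single one, allows for the normal run-to-run variation that the repeated baseline runs are meant to capture.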

Prompt Template Dashboard

Now that we've gotten a general overview of how our Project is faring, we can take a deeper look at individual Prompt Templates. If you haven't yet, click on the corresponding Prompt Template row within the Project Dashboard table.

As mentioned above, you'll now see data on individual flagged call categories, for the given timeframe and environment(s).

Below that, you'll notice a table of every call you've sent, including data on cost, latency, and any flags. You can filter the calls for a given flag by clicking on the card for that respective flag at the top.

Search

Using the Search box on the right, you can filter the table for calls containing the entered string. Free-tier organizations can search calls that occurred within the previous 7 days. By default, Paid organizations can search calls from up to 31 days back. Contact us to upgrade.
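
If it helps to reason about what a search will and won't return, the rule can be sketched as a substring filter inside a tier-dependent time window. This is only a model of the behavior described above: search_calls, the record fields, and the case-sensitive matching are assumptions, not the dashboard's actual implementation.

```python
from datetime import datetime, timedelta, timezone

# Default search windows quoted in the text above.
SEARCH_WINDOW_DAYS = {"free": 7, "paid": 31}


def search_calls(calls, query, tier, now=None):
    """Return calls inside the tier's window whose text contains the query string."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=SEARCH_WINDOW_DAYS[tier])
    # Assumption: plain, case-sensitive substring matching.
    return [c for c in calls if c["timestamp"] >= cutoff and query in c["text"]]


# A fixed "now" keeps the example deterministic.
now = datetime(2024, 1, 31, tzinfo=timezone.utc)
calls = [
    {"timestamp": now - timedelta(days=3), "text": "refund request"},
    {"timestamp": now - timedelta(days=10), "text": "refund denied"},
]
free_hits = search_calls(calls, "refund", "free", now)
paid_hits = search_calls(calls, "refund", "paid", now)
```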

Call Details

Clicking on a given Call will open the Call Details page, which provides both a full breakdown of the Call and the Chain context within which it was invoked (if one was provided).

Manually Add Call as Test Case

One of the most important but taxing aspects of testing prompts effectively is gathering a robust set of test cases to evaluate against. And it just so happens that production data is the best source of meaningful test cases.

From either the table above or the Call Details page, you can click Add Test Case to save a call to your test set for future testing and prompt engineering.

Scored Calls

With your evals manually configured or auto-generated, Libretto will automatically run evals against a sample of your traffic (at a rate of roughly 1 in 20). These calls and their respective evaluation results can be viewed via the Scored tab.
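
A sampling rate of roughly 1 in 20 can be pictured as follows. The sketch hashes a call ID so that the same call always gets the same decision; this is one common way to sample traffic, not necessarily the one Libretto uses, and should_score and the call ID format are hypothetical.

```python
import hashlib


def should_score(call_id: str, one_in: int = 20) -> bool:
    """Deterministically select roughly 1 in `one_in` calls by hashing the call ID."""
    digest = hashlib.sha256(call_id.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and keep one residue class.
    return int.from_bytes(digest[:8], "big") % one_in == 0


# Over many calls, about 5% should be selected for scoring.
picked = sum(should_score(f"call-{i}") for i in range(10000))
```

Hash-based selection avoids storing any sampling state, which is why it is a popular choice when the decision may be made in more than one place.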

What's Next?

Congrats! If you've made it this far, you're all set to monitor your LLM traffic and gain meaningful insights into where things may have gone wrong. Even better, you've established a solid and crucial foundation to optimize and test your prompts.

With this foundation in place, check out some of our Prompt Engineering tools in the Libretto Playground.